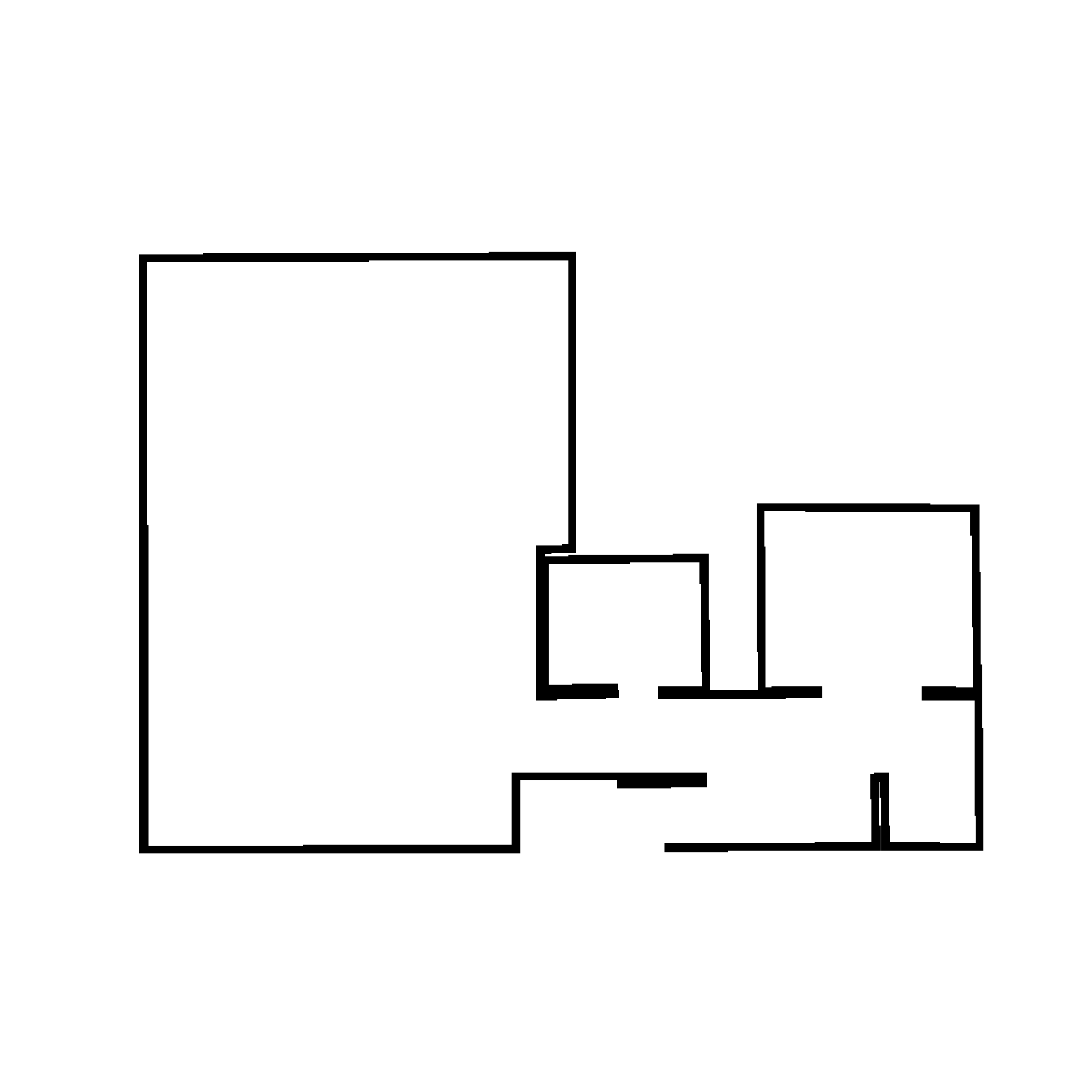

In this paper we propose an efficient data-driven solution to self-localization within a floorplan.

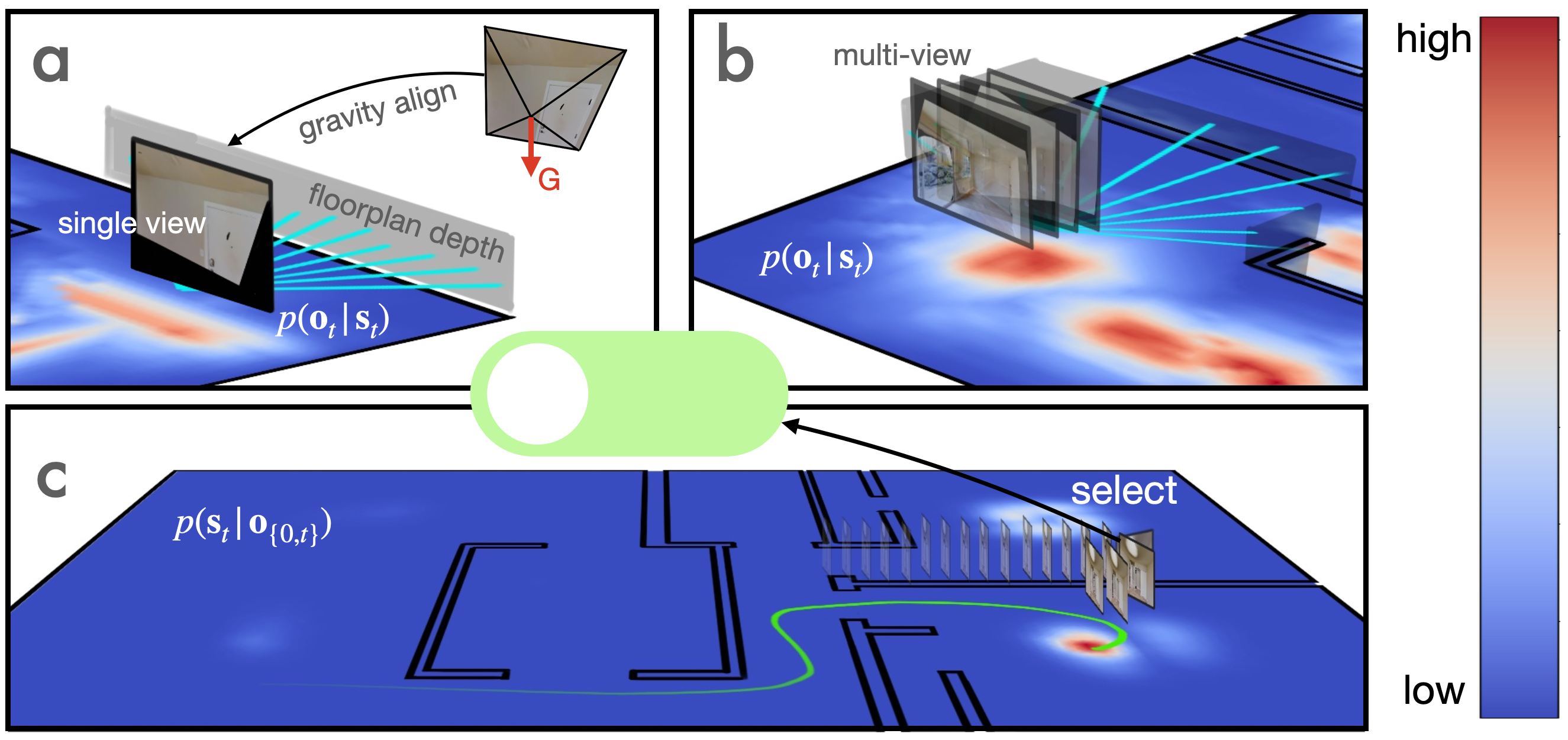

Floorplan data is readily available, persistent over the long term, and inherently robust to changes in visual appearance. Our method neither requires retraining for each map and location nor demands a large database of images of the area of interest. We propose a novel probabilistic model consisting of an observation module and a novel temporal filtering module. Operating internally with an efficient ray-based representation, the observation module consists of a single-view and a multi-view module that predict horizontal depth from images, and it fuses their results to benefit from the advantages of both.

Our method runs on conventional consumer hardware and overcomes a common limitation of competing methods, which often demand upright images. Our full system meets real-time requirements while outperforming the state of the art by a significant margin.

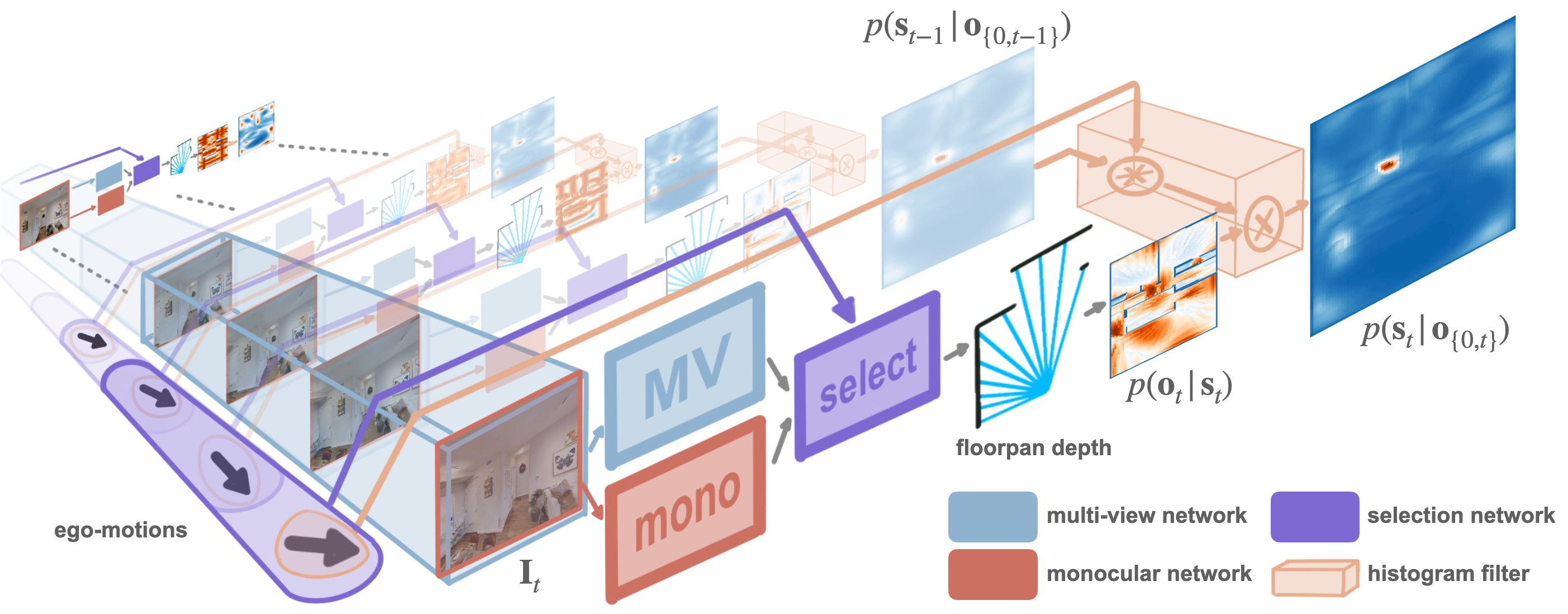

Our pipeline adopts a monocular and a multi-view network to predict floorplan depth. A selection network consolidates both predictions based on the relative poses. The resulting floorplan depth is used in our observation model and integrated over time by our novel SE(2) histogram filter to perform sequential floorplan localization.
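The consolidation step can be pictured as a per-ray soft selection between the two depth predictions. The sketch below is only an illustration under that assumption: `fuse_floorplan_depth` and its `weights` argument are hypothetical names, and the weights are passed in by hand here, whereas in the pipeline a selection network would produce them from the relative poses.

```python
def fuse_floorplan_depth(mono_depth, multiview_depth, weights):
    """Blend two per-ray depth predictions.

    weights[i] in [0, 1] is the (assumed) confidence in the monocular
    prediction for ray i; 1 - weights[i] goes to the multi-view prediction.
    """
    if not (len(mono_depth) == len(multiview_depth) == len(weights)):
        raise ValueError("all inputs must have one entry per ray")
    return [
        w * m + (1.0 - w) * v
        for m, v, w in zip(mono_depth, multiview_depth, weights)
    ]
```

A weight of 1 keeps the monocular depth for that ray, a weight of 0 keeps the multi-view depth, and values in between interpolate.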

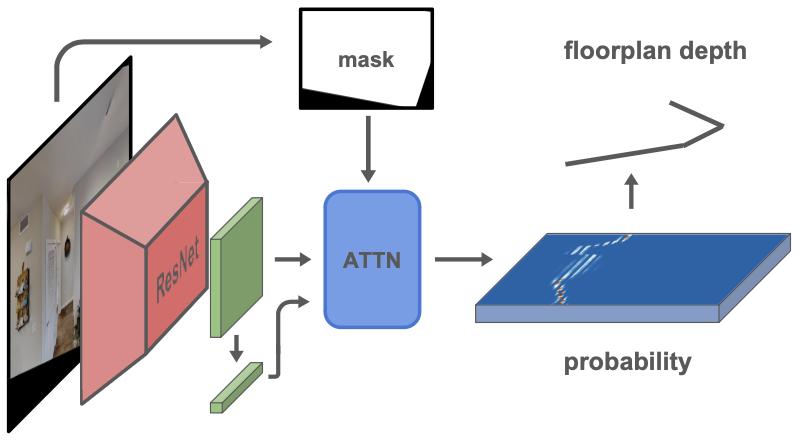

A gravity-aligned image is fed into the ResNet- and attention-based feature network. Invisible pixels are masked out in the attention. The network outputs a probability distribution over depth hypotheses, and its expectation is used as the predicted floorplan depth.
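Taking the expectation of a distribution over depth hypotheses can be sketched for a single image column as follows. The function names, the use of a softmax over raw scores, and the example depth bins are assumptions for illustration, not details taken from the network itself.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of scores."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def expected_depth(logits, depth_hypotheses):
    """Expected depth for one column: sum_i p_i * d_i."""
    probs = softmax(logits)
    return sum(p * d for p, d in zip(probs, depth_hypotheses))
```

Because the output is a probability-weighted average rather than the single most likely bin, the predicted depth can fall between the discrete hypotheses.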

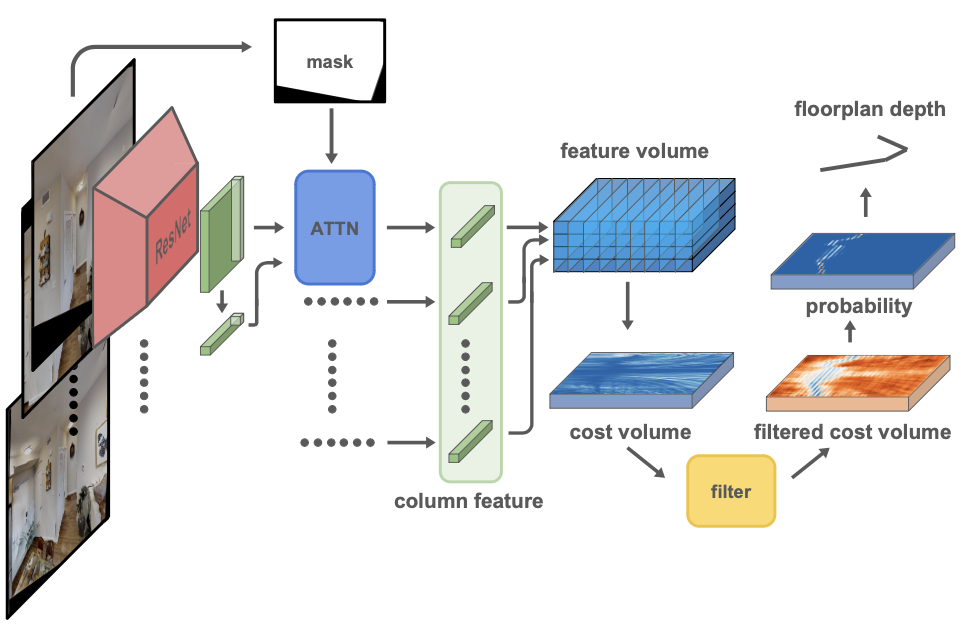

Column features of the images are extracted and gathered in the reference frame. Their cross-view feature variance is used as the cost. A U-Net-like network learns to filter the cost volume into a probability distribution, and the floorplan depth is defined as its expectation.
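A minimal sketch of the variance cost for one reference column is given below. It assumes the features of every view have already been gathered in the reference frame for each depth hypothesis. The learned U-Net filtering is replaced here by a plain softmax over the negative cost, which is a stand-in and not the method described above; all names and the `temperature` parameter are hypothetical.

```python
import math


def variance_cost(features_per_view):
    """features_per_view[v][d][c]: channel c of view v at depth hypothesis d.

    Returns one cost per depth hypothesis: the cross-view variance,
    averaged over channels. Low variance means the views agree.
    """
    n_views = len(features_per_view)
    n_depths = len(features_per_view[0])
    n_channels = len(features_per_view[0][0])
    costs = []
    for d in range(n_depths):
        total = 0.0
        for c in range(n_channels):
            values = [features_per_view[v][d][c] for v in range(n_views)]
            mean = sum(values) / n_views
            total += sum((x - mean) ** 2 for x in values) / n_views
        costs.append(total / n_channels)
    return costs


def depth_from_cost(costs, depth_hypotheses, temperature=1.0):
    """Stand-in for the learned filtering: softmax(-cost), then expectation."""
    logits = [-c / temperature for c in costs]
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return sum((e / total) * d for e, d in zip(exps, depth_hypotheses))
```

The hypothesis at which the views agree best receives the lowest cost and therefore the highest probability.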

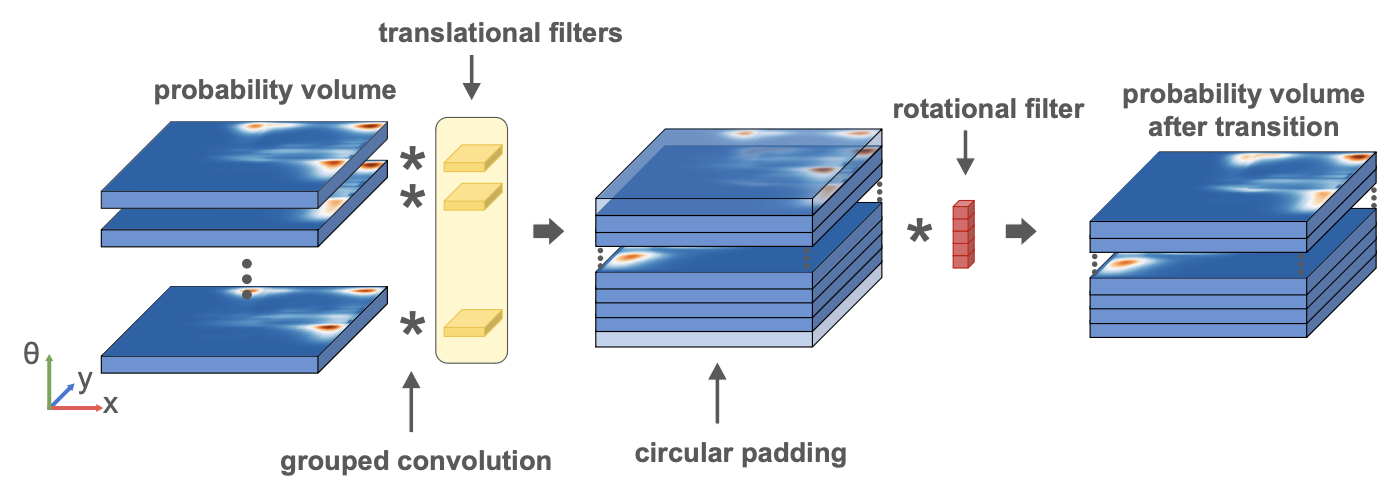

Unlike previous work operating on the Euclidean R² state space, we work on SE(2) and transition through ego-motions, so the translation in the world frame depends on the current orientation. Therefore, we decouple the translation and rotation and apply different 2D translation filters for different orientations, before applying the rotation filter to the entire volume along the orientation axis. The 2D translation step can be implemented efficiently as a grouped convolution, where each orientation is a group.
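The decoupled motion update can be sketched on a small belief volume indexed as `belief[orientation][row][column]`. This is a simplified illustration under several assumptions: the kernels are noise-free delta kernels (a practical filter would spread mass to model motion uncertainty), rows correspond to the world y axis, orientation bins are uniform over 2π, and all function names are hypothetical. The loop over orientations plays the role of the grouped convolution, with one kernel per orientation group.

```python
import math


def translation_kernel(dx, dy, theta, cell_size, radius):
    """Delta kernel for ego-motion (dx, dy) rotated into the world frame."""
    wx = math.cos(theta) * dx - math.sin(theta) * dy
    wy = math.sin(theta) * dx + math.cos(theta) * dy
    # Round to whole cells and clamp the shift to the kernel support.
    cx = max(-radius, min(radius, round(wx / cell_size)))
    cy = max(-radius, min(radius, round(wy / cell_size)))
    size = 2 * radius + 1
    kernel = [[0.0] * size for _ in range(size)]
    kernel[radius + cy][radius + cx] = 1.0
    return kernel


def filter_slice(slice2d, kernel, radius):
    """Spread each cell's mass according to the kernel (one orientation)."""
    height, width = len(slice2d), len(slice2d[0])
    out = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            mass = slice2d[y][x]
            if mass == 0.0:
                continue
            for ky in range(-radius, radius + 1):
                for kx in range(-radius, radius + 1):
                    ny, nx = y + ky, x + kx
                    # Mass that would leave the grid is dropped.
                    if 0 <= ny < height and 0 <= nx < width:
                        out[ny][nx] += mass * kernel[radius + ky][radius + kx]
    return out


def motion_update(belief, dx, dy, dtheta, cell_size, radius=2):
    """Translate each orientation slice, then rotate the whole volume."""
    n_bins = len(belief)
    bin_size = 2.0 * math.pi / n_bins
    # Step 1: a different 2D translation filter for every orientation.
    moved = [
        filter_slice(
            belief[o],
            translation_kernel(dx, dy, o * bin_size, cell_size, radius),
            radius,
        )
        for o in range(n_bins)
    ]
    # Step 2: circular shift along the orientation axis.
    steps = round(dtheta / bin_size)
    rotated = [moved[(o - steps) % n_bins] for o in range(n_bins)]
    # Renormalize so the belief sums to one again.
    total = sum(v for s in rotated for row in s for v in row)
    if total == 0.0:
        return rotated
    return [[[v / total for v in row] for row in s] for s in rotated]
```

The same forward ego-motion moves probability mass along different world directions depending on the orientation bin, which is why a single shared 2D filter would not be sufficient.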

@article{chen2024f3loc,

author = {Chen, Changan and Wang, Rui and Vogel, Christoph and Pollefeys, Marc},

title = {F^3Loc: Fusion and Filtering for Floorplan Localization},

journal = {CVPR},

year = {2024},

}